When it comes to books I’m really old school. I love everything about them: the pleasure of discovering a book you’ve been dying to find, nestled between two otherwise forgettable books in the store; the crinkling goodness of a new book; the reflexive care not to damage the spine unduly; inscriptions from decades past in second-hand books; the smell, the texture, everything. And don’t even get me started on the religious experience of visiting your favourite libraries. In other words, e-books are just fundamentally incompatible with my reading experience.

That is, until I had to move houses last year. It is not a pleasant experience to have to cart around a few hundred books, even within the same city. This, and the fact that some Dan McGirt books I’ve wanted to read are only really available to me in e-book form, finally pushed me to actually buy an Amazon Kindle.

My precioussss

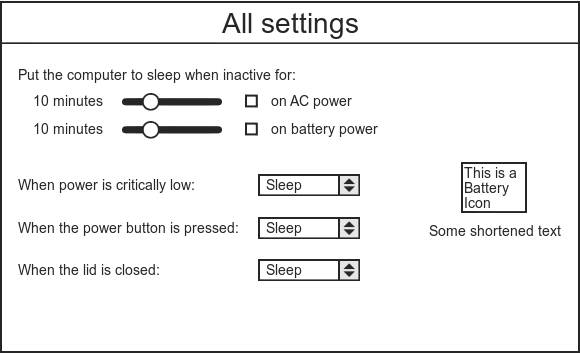

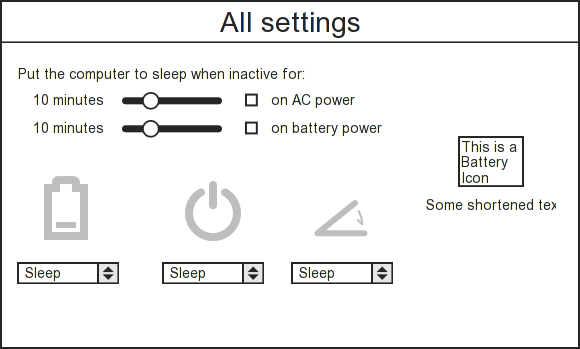

About 3 months ago, I got a black Kindle 3G (the 3rd revision). Technical reviews abound, so I’m not going to talk about the technology much. I didn’t see any articles that really spoke about using it, which is far more relevant to potential buyers (I’m sure they’re out there; I just didn’t find any good ones). So this is my attempt at describing the bits of the Kindle experience that are relevant to others of my ilk (the ones who nodded along to the first paragraph, especially :D).

The Device

Well, I’m a geek, so I can’t avoid talking about the technology completely, but I’ll try to keep it to a minimum (also, it runs Linux, woohoo! :D ed: and GStreamer too, as Sebastian Dröge points out!).

I bought the Kindle with the 6″ display and free wireless access throughout the world (<insert caveat about coverage maps here>). The device itself is really slick, and the build quality is good. The keys on the keyboard feel hard to press, but this is presumably intentional, because you don’t want to randomly press keys while handling the device.

At first glance, the e-ink display on the new device is brilliant, and the contrast in daylight is really good (more about this later). It’s light, and fairly easy to use (but I have a really high threshold for complex devices, so don’t take my word for it). The 3G coverage falls back to 2G mode in India. I’ve tried it in a few places around India, and the connectivity is pretty hit-or-miss. Maybe things will change for the better with the impending 3G rollout.

The battery life is either disappointing or awesome, depending on whether you’ve got wireless enabled or not. This is a bit of a nag, but you quickly get used to just switching off the wireless when you’re done shopping or browsing.

Reading

Obviously the meat and drink of this device is the reading experience. It is not the same as reading a book. There are a lot of small, niggling differences that will keep reminding you that you’re not reading a book, and this is something you’re just going to have to accept if you’re getting the device.

Firstly, the way you hold the device is going to be different from holding a book. I generally hold a book along the spine with one hand, either at the top or bottom (depending on whether I’m sitting, lying down, etc.). You basically cannot hold the Kindle from the top: there isn’t enough room. I alternate between holding the device on my palm (it’s not small enough to hold comfortably like that, though your mileage will vary depending on the size of your hand), grasping it between my fingers around the bottom left or right edge (this is where the hard keys on the keyboard help, since you won’t press a key by mistake in this position), or just resting the Kindle on a handy surface (table or lap while sitting, tummy while supine :) ).

Secondly, the light response of the device is very different from that of books. Paper is generally not too picky about the type of lighting (whiteness, diffused or direct, etc.). In daylight, the Kindle looks like a piece of white paper with crisp printing, which is nice. At night, however, it depends entirely on the kind of lighting you have. My house has mostly yellowish fluorescent lamps, so the display gets dull unless the room is very well lit. I also find that the contrast drops dramatically if the light source is not behind you (diffuse lighting might not be so great, in other words). There are some angles at which the display reflects lighting that’s behind or above you, but it’s not too bad.

The fonts and spacing on the Kindle are adjustable and this is one area in which it is hard to find fault with the device. Whatever your preference is in print (small fonts, large fonts, wide spacing, crammed text), you can get the same effect across all your books.

Flipping pages looks annoying when you see videos of the Kindle (since flipping requires a refresh of the whole screen), but in real life it’s fast enough to not annoy.

The Store

I’ve only used the Kindle Store from India, and in a word, it sucks. The number of books available is rubbish. I don’t care if they have almost(?) a million books, but if they don’t have Good Omens, Cryptonomicon, or most of Asimov’s Robot series, they’re fighting a losing battle as far as I’m concerned (these are all books that I’ve actually wanted to read/re-read since I got the Kindle).

When I do find a book I want, the pricing is inevitably ridiculous. I don’t know what the publishers are smoking, but could someone please tell them that charging more than twice the price of a paperback for an e-book is just plain stupid? Have they learned nothing from the iTunes story? Speaking of which, the fact that the books I buy are locked by DRM to Kindle devices is very annoying.

While the reading experience is something I can get used to, this is the biggest problem I currently have. From my perspective, books have been the last bastion of purity, where piracy is not the only way to work around the inability of various industry middlemen to find a reasonable way to deal with the Internet and its impact on creative content. I am really hoping that Amazon will soon get enough muscle to pull an Apple on the book industry and get the pricing to reasonable levels, and possibly go one step further and break down country-wise barriers. Otherwise, we’re just going to have to deal with another round of rampant piracy and broken systems to try to curb it.

(Editor’s note: This bit clearly bothers me a lot and deserves a blog post of its own, but let’s save that for another day)

The Ecosystem

A lot of my family and friends love reading books, and a large number of the books I buy go through many hands before finding their final resting place on my shelf. This is not just a matter of cost — there is a whole ecosystem of sharing your favourite books with like-minded people, discussing, and so on.

The Kindle device itself isn’t conducive to sharing (if I’m reading a book on the device, nobody else can use the device, obviously). Interestingly, Amazon has recently introduced the idea of lending books from the Kindle (something Barnes and Noble has had for a while). You can lend a book you’ve bought off the Kindle Store to someone else with a Kindle account, once, for a period of 2 weeks. This in itself is a really lame restriction, but even something more relaxed would be useless to me: almost nobody I know has a device that supports the Kindle software (phones and laptops/desktops do not count as far as I am concerned).

So in my opinion, the complete break from the reading ecosystem is a huge negative for the Kindle experience. When I know I’m going to want to lend a book to someone, I immediately eliminate the possibility of buying it off the Kindle Store. This is true of all e-books, of course, and might become less of an issue in decades to come, but it is a real problem today.

Other Fluff

The Kindle comes with support for MP3s, browsing the Internet and some games (some noises about an app store have also been made). These are just fluff — I don’t care if my reading device has any of these things. Display technology is still quite far from getting to a point where convergence is possible without compromising the reading experience (yes, I’m including the Pixel Qi display in this assertion, but my opinion is only based on the several videos of devices using these displays).

The Verdict

Honestly, it’s not clear to me whether the Kindle is a keeper or not. It’s definitely a very nice device, technically. I think it’s possible for Amazon to improve the reading experience; I’m sure the display technology will get better with regard to its response to different kinds of lighting. Some experimentation with the design to make it work with standard reading postures would be nice too. The Kindle Store is a disaster for me, and I really hope Amazon and the publishing industry get their act together.

Maybe this article will be helpful to potential converts out there. If you’ve got questions about the Kindle or anything to add that I’ve missed, feel free to drop a comment.