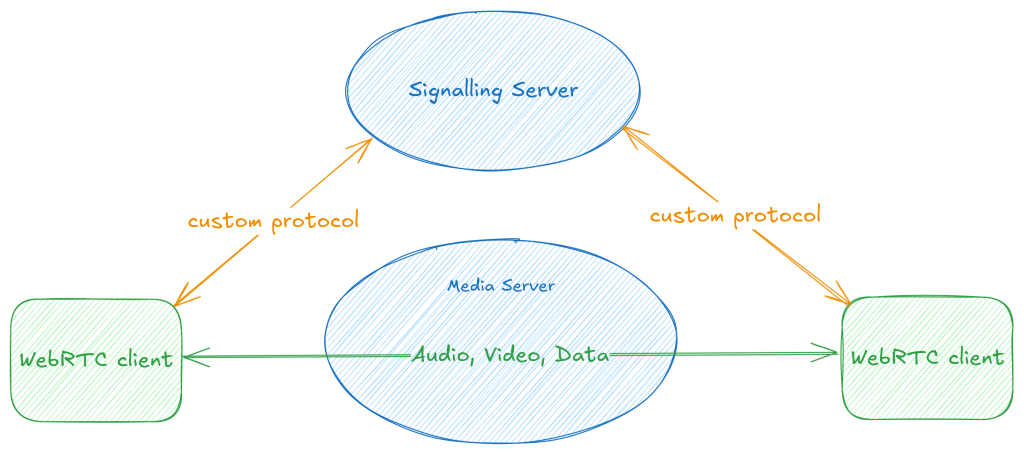

The WebRTC nerds among us will remember the first thing we learn about WebRTC, which is that it is a specification for peer-to-peer communication of media and data, but it does not specify how signalling is done.

Or put more simply, if you want to call someone on the web, WebRTC tells you how you can transfer audio, video and data, but it leaves out the bit about how you make the call itself: how do you locate the person you’re calling, let them know you’d like to call them, and complete the few remaining steps before you can see and talk to each other.

While this allows services to provide their own mechanisms to manage how WebRTC calls work, the lack of a standard mechanism means that general-purpose applications need to individually integrate each service that they want to support. For example, GStreamer’s webrtcsrc and webrtcsink elements support various signalling protocols, including Janus Video Rooms, LiveKit, and Amazon Kinesis Video Streams.

However, having a standard way for clients to do signalling would help developers focus on their application and worry less about interoperability with different services.

Standardising Signalling

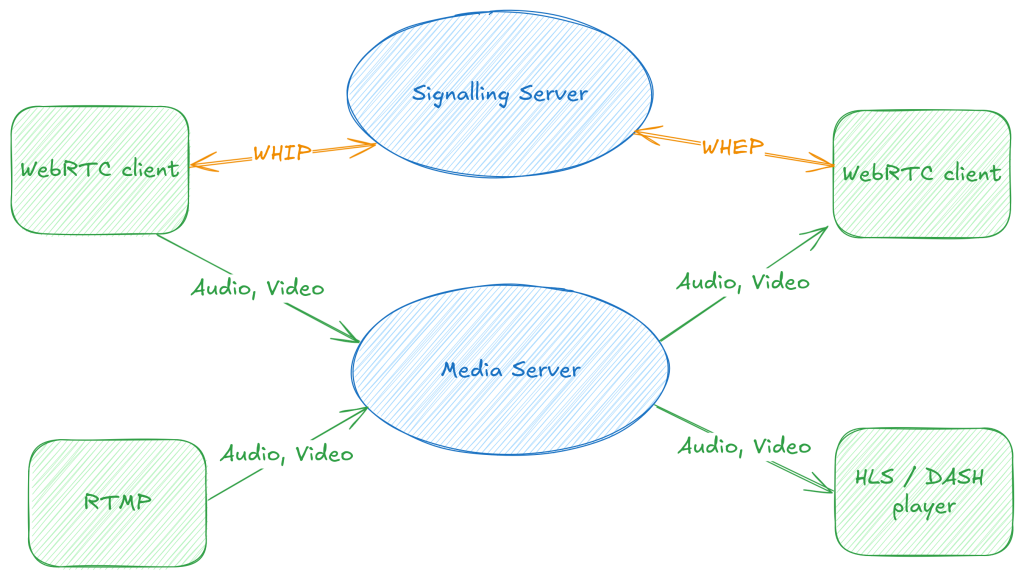

With this motivation, the IETF WebRTC Ingest Signalling over HTTPS (WISH) workgroup has been working on two specifications:

- WebRTC-HTTP Ingestion Protocol (WHIP)

- WebRTC-HTTP Egress Protocol (WHEP)

(author’s note: the puns really do write themselves :))

As the names suggest, the specifications provide a way to perform signalling using HTTP. WHIP gives us a way to send media to a server, to ingest into a WebRTC call or live stream, for example.

Conversely, WHEP gives us a way for a client to use HTTP signalling to consume a WebRTC stream – for example to create a simple web-based consumer of a WebRTC call, or tap into a live streaming pipeline.
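To make the HTTP side of this concrete, here is a minimal sketch of the exchange both specifications describe: the client POSTs an SDP offer to the endpoint, the server replies with `201 Created`, an SDP answer and a `Location` header naming the session resource, and the client later sends a DELETE to that resource to end the session. The function names, the bearer token and the session path are assumptions made for illustration. The requests are only built, not sent, and this is not how the GStreamer elements are implemented.

```python
from typing import Mapping, Optional, Tuple
from urllib.parse import urljoin
from urllib.request import Request


def build_session_offer(endpoint: str, sdp_offer: str,
                        token: Optional[str] = None) -> Request:
    """Build (but do not send) the POST that starts a WHIP or WHEP session."""
    headers = {"Content-Type": "application/sdp"}
    if token is not None:
        # Hypothetical bearer token; many services require one.
        headers["Authorization"] = f"Bearer {token}"
    return Request(endpoint, data=sdp_offer.encode("utf-8"),
                   headers=headers, method="POST")


def parse_session_response(endpoint: str, status: int,
                           headers: Mapping[str, str],
                           body: bytes) -> Tuple[str, str]:
    """Return (session resource URL, SDP answer) from the server's reply.

    `headers` is a plain mapping here; real HTTP header names are
    case-insensitive, which an HTTP client library normally handles.
    """
    if status != 201:
        raise RuntimeError(f"expected 201 Created, got {status}")
    location = headers.get("Location")
    if location is None:
        raise RuntimeError("response is missing the Location header")
    # The Location header may be relative to the endpoint URL.
    return urljoin(endpoint, location), body.decode("utf-8")


def build_session_delete(resource_url: str,
                         token: Optional[str] = None) -> Request:
    """Build (but do not send) the DELETE that tears the session down."""
    headers = {}
    if token is not None:
        headers["Authorization"] = f"Bearer {token}"
    return Request(resource_url, headers=headers, method="DELETE")
```

The SDP offer and answer themselves still come from a WebRTC stack (a browser's `RTCPeerConnection`, or `webrtcbin` in GStreamer). The HTTP layer only carries them between the two sides.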

With this view of the world, WHIP and WHEP can be used not only for calling applications, but also as an alternative way to ingest or play back live streams, with lower latency and a near-ubiquitous real-time communication API.

In fact, several services already support this, including Dolby Millicast, LiveKit and Cloudflare Stream.

WHIP and WHEP with GStreamer

We know GStreamer already provides developers two ways to work with WebRTC streams:

- webrtcbin: provides a low-level API, akin to the PeerConnection API that browser-based users of WebRTC will be familiar with

- webrtcsrc and webrtcsink: provide high-level elements that can respectively produce/consume media from/to a WebRTC endpoint

At Asymptotic, my colleagues Tarun and Sanchayan have been using these building blocks to implement GStreamer elements for both the WHIP and WHEP specifications. You can find these in the GStreamer Rust plugins repository.

Our initial implementations were based on webrtcbin, but have since been moved over to the higher-level APIs to reuse common functionality (such as automatic encoding/decoding and congestion control). Tarun covered our work in a talk at last year’s GStreamer Conference.

Today, we have 4 elements implementing WHIP and WHEP.

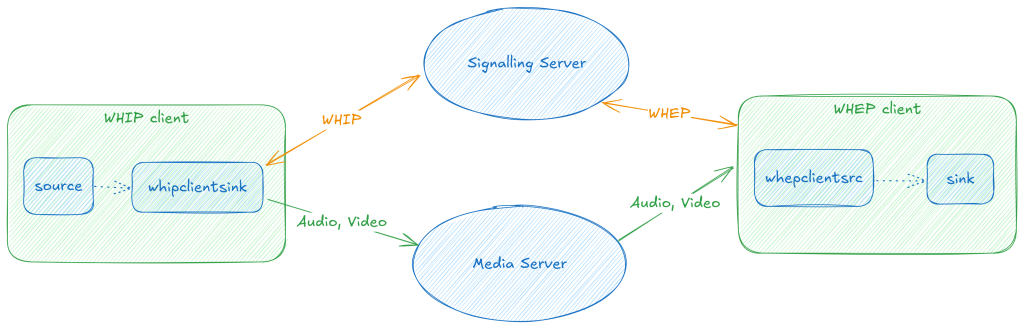

Clients

whipclientsink: This is a webrtcsink-based implementation of a WHIP client, which you can use to send media to a WHIP server. For example, streaming your camera to a WHIP server is as simple as:

```shell
gst-launch-1.0 -e \
  v4l2src ! video/x-raw ! queue ! \
  whipclientsink signaller::whip-endpoint="https://my.webrtc/whip/room1"
```

whepclientsrc: This is work in progress and allows us to build player applications to connect to a WHEP server and consume media from it. The goal is to make playing a WHEP stream as simple as:

```shell
gst-launch-1.0 -e \
  whepclientsrc signaller::whep-endpoint="https://my.webrtc/whep/room1" ! \
  decodebin ! autovideosink
```

The client elements fit quite neatly into how we might imagine GStreamer-based clients could work. You could stream arbitrary stored or live media to a WHIP server, and play back any media a WHEP server provides. Both pipelines implicitly benefit from GStreamer’s ability to use hardware-acceleration capabilities of the platform they are running on.
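For an application rather than a one-off command line, the same WHIP client pipeline could be built from a description string. The sketch below assumes PyGObject and the GStreamer Rust plugins are installed. The helper names are hypothetical, and the endpoint URL is the placeholder used in the command above.

```python
def whip_pipeline_description(endpoint: str, source: str = "v4l2src") -> str:
    """Return a gst-launch style description that streams `source` to `endpoint`."""
    return (
        f"{source} ! video/x-raw ! queue ! "
        f'whipclientsink signaller::whip-endpoint="{endpoint}"'
    )


def run_pipeline(description: str) -> None:
    """Run the pipeline until it errors out or reaches end-of-stream."""
    # Imported lazily so the description helper works without GStreamer installed.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    pipeline = Gst.parse_launch(description)
    pipeline.set_state(Gst.State.PLAYING)

    # Block until the pipeline posts an error or end-of-stream message.
    bus = pipeline.get_bus()
    msg = bus.timed_pop_filtered(
        Gst.CLOCK_TIME_NONE, Gst.MessageType.ERROR | Gst.MessageType.EOS
    )
    if msg is not None and msg.type == Gst.MessageType.ERROR:
        err, debug = msg.parse_error()
        print(f"pipeline error: {err.message} ({debug})")
    pipeline.set_state(Gst.State.NULL)


if __name__ == "__main__":
    run_pipeline(whip_pipeline_description("https://my.webrtc/whip/room1"))
```

Swapping the `source` argument for a file or network source is one way to stream stored media instead of a camera, as long as the source ends up producing raw video.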

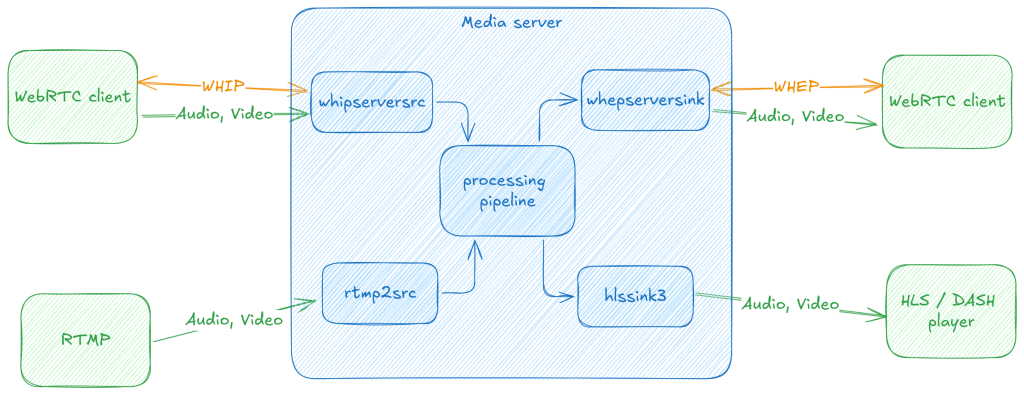

Servers

- whipserversrc: Allows us to create a WHIP server to which clients can connect and provide media, each of which will be exposed as GStreamer pads that can be arbitrarily routed and combined as required. We have an example server that can play all the streams being sent to it.

- whepserversink: Finally, we have ongoing work to publish arbitrary streams over WHEP for web-based clients to consume this media.

The two server elements open up a number of interesting possibilities. We can ingest arbitrary media with WHIP, and then decode and process, or forward it, depending on what the application requires. We expect that the server API will grow over time, based on the different kinds of use-cases we wish to support.

This is all pretty exciting, as we have all the pieces to create flexible pipelines for routing media between WebRTC-based endpoints without having to worry about service-specific signalling.

If you’re looking for help realising WHIP/WHEP based endpoints, or other media streaming pipelines, don’t hesitate to reach out to us!

Ben

September 6, 2024 — 11:08 pm

Not related to your excellent write-up here, I was just curious as to what program you used to make those diagrams? I use Gaphor a lot myself, but those diagrams are amazing!

Arun

September 10, 2024 — 5:39 pm

o/ Ben, Gaphor looks interesting! I use Excalidraw for this diagram. It can export to SVG/PNG and it’s open source too (not sure how easy it is to actually self-host).

Mathieu Duponchelle

September 7, 2024 — 5:18 pm

Excited to get those WIP WHEP elements upstreamed, now that the WHIP elements are no longer WIP.

Arun

September 10, 2024 — 5:39 pm

[applause]