A lesser-known but particularly powerful feature of GStreamer is its ability to play media synchronised across devices with fairly good accuracy.

The way things stand right now, though, achieving this requires some amount of fiddling and a reasonably thorough knowledge of how GStreamer’s synchronisation mechanisms work. While we have had some excellent talks about these at previous GStreamer conferences, getting things to work is still a fair amount of effort for someone not well-versed in GStreamer.

As part of my work with the Samsung OSG, I’ve been working on addressing this problem by wrapping all the complexity in a library. The intention is that anybody who wants different devices on a network to play the same stream, all in sync, should be able to do so with a few lines of code and the basic know-how for writing GStreamer-based applications.

I’ve started work on this already, and you can find the code in the creatively named gst-sync-server repo.

Design and API

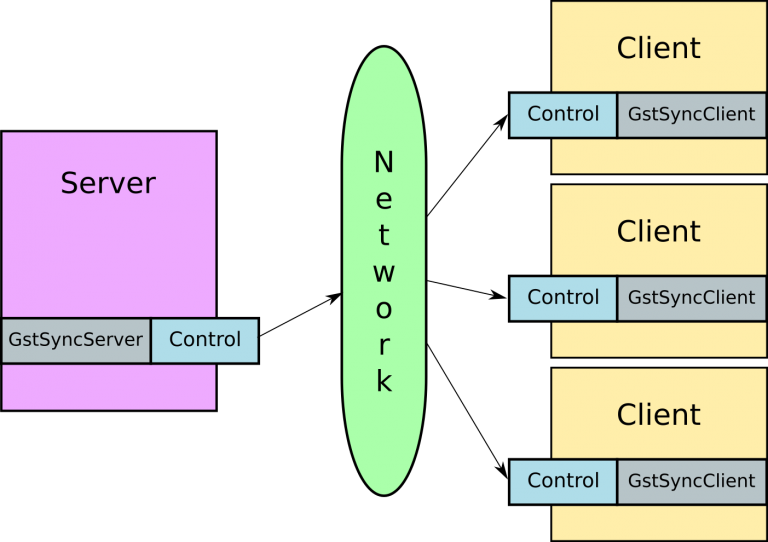

Let’s make this easier by starting with a picture …

Let’s say you’re writing a simple application where you have two or more devices that need to play the same video stream in sync. Your system would consist of two entities:

- A server: this is where you configure what needs to be played. It instantiates a GstSyncServer object on which it can set a URI that needs to be played. There are other controls available here that I’ll get to in a moment.

- A client: each device would be running a copy of the client, and would get information from the server telling it what to play, and what clock to use to make sure playback is synchronised. In practical terms, you do this by creating a GstSyncClient object and giving it a playbin element which you’ve configured appropriately (this usually involves at least setting the appropriate video sink that integrates with your UI).

That’s pretty much it. Your application instantiates these two objects, starts them up, and as long as the clients can access the media URI, you magically have two synchronised streams on your devices.
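To give a feel for what “what clock to use” involves, here is a sketch of the classic NTP-style calculation a client can use to estimate the offset between its own clock and the server’s from a request/response round trip. This is roughly the idea behind GStreamer’s network clock (GstNetTimeProvider on one side, GstNetClientClock on the other), but it is a simplified illustration rather than gst-sync-server’s code. `Exchange`, `best_offset` and `to_local` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exchange:
    """One request/response round trip; all times in nanoseconds.

    Hypothetical helper for illustration, not gst-sync-server code.
    t0: client clock when the request was sent
    t1: server clock when the request arrived
    t2: server clock when the reply was sent
    t3: client clock when the reply arrived
    """

    t0: int
    t1: int
    t2: int
    t3: int

    def offset(self) -> int:
        # Estimated (server - client) clock difference, assuming the network
        # delay is the same in both directions.
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) // 2

    def round_trip(self) -> int:
        # Time spent on the network, excluding the server's processing time.
        return (self.t3 - self.t0) - (self.t2 - self.t1)


def best_offset(exchanges: List[Exchange]) -> int:
    # The sample with the shortest round trip suffered the least queueing
    # delay, so its offset estimate is usually the most trustworthy.
    return min(exchanges, key=Exchange.round_trip).offset()


def to_local(server_time: int, offset: int) -> int:
    # Convert a time on the server's clock into the client's local clock.
    return server_time - offset
```

Once every client agrees on the clock, GStreamer pipelines that share the same clock and base time render the same running time at the same moment. This is why the server only needs to distribute a handful of values rather than stream timing continuously.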

Control

The keen observers among you will have noticed that there is a control entity in the above diagram that deals with communicating information from the server to clients over the network. While I have currently implemented a simple TCP protocol for this, my goal is to abstract out the control transport interface so that it is easy to drop in a custom transport (WebSockets, a REST API, whatever).
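A sketch of what such a pluggable transport could look like, assuming a simple publish/subscribe shape: the server only ever calls `send()`, and each client registers a callback. `ControlTransport`, `InMemoryTransport` and `subscribe` are hypothetical names for illustration, not the library’s actual interface.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Optional

# Hypothetical interface for illustration; not gst-sync-server's actual API.
Callback = Callable[[str], None]


class ControlTransport(ABC):
    """Carries serialised sync information from the server to clients."""

    @abstractmethod
    def send(self, message: str) -> None:
        """Deliver one message to every connected client."""

    @abstractmethod
    def subscribe(self, callback: Callback) -> None:
        """Client side: register a callback for incoming messages."""


class InMemoryTransport(ControlTransport):
    """Toy transport that 'delivers' messages by calling callbacks directly."""

    def __init__(self) -> None:
        self._callbacks: List[Callback] = []
        self._last: Optional[str] = None

    def send(self, message: str) -> None:
        self._last = message
        for callback in self._callbacks:
            callback(message)

    def subscribe(self, callback: Callback) -> None:
        self._callbacks.append(callback)
        # Replay the current state so a client that joins late is not left
        # waiting for the next change.
        if self._last is not None:
            callback(self._last)
```

A TCP, WebSocket or REST implementation would then be just another subclass, and the server code would not need to change. Replaying the last message to new subscribers is one simple way to let a client join at any time.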

The actual sync information is merely a structure marshalled into a JSON string and sent to clients every time something changes. Once your application has some media playing, the next thing you’ll want to do from your server is control playback. This can include:

- Changing what media is playing (like after the current media ends)

- Pausing/resuming the media

- Seeking

- “Trick modes” such as fast forward or reverse playback

The first two of these work already, and seeking is on my short-term to-do list. Trick modes, as the name suggests, can be a bit more tricky, so I’ll likely get to them after other things are done.
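To make the “structure marshalled into JSON” idea concrete, here is a sketch of what such a message might contain and how pausing and resuming could be expressed with it. The fields (`uri`, `base_time`, `paused`, `paused_at`) are assumptions, not the library’s actual wire format. The idea, borrowed from how a GStreamer pipeline handles pausing, is that on resume the base time is pushed forward by the paused duration, so every client computes the same position from the same message.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SyncInfo:
    """Hypothetical sync message; times are ns on the shared network clock."""

    uri: str
    base_time: int           # shared clock time at which running time is 0
    paused: bool = False
    paused_at: int = 0       # shared clock time at which the pause began

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "SyncInfo":
        return cls(**json.loads(text))

    def position(self, now: int) -> int:
        # Running time every client should be at; frozen while paused.
        end = self.paused_at if self.paused else now
        return max(0, end - self.base_time)

    def pause(self, now: int) -> None:
        if not self.paused:
            self.paused, self.paused_at = True, now

    def resume(self, now: int) -> None:
        if not self.paused:
            return
        # Push base_time forward by the time spent paused, so the position
        # carries on from where it stopped instead of jumping ahead.
        self.base_time += now - self.paused_at
        self.paused = False
```

After each `pause()` or `resume()`, the server would send the new `to_json()` output to all clients.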

Getting fancy

My hope is to see this library being used in a few other interesting use cases:

Video walls: having a number of displays stacked together so you have one giant display — these are all effectively playing different rectangles from the same video

Multiroom audio: you can play the same music across different speakers in a single room, or multiple rooms, or even group sets of speakers and play different media on different groups

Media sharing: being able to play music or videos on your phone and have your friends be able to listen/watch at the same time (a silent disco app?)
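For the video wall case, each display needs to know which rectangle of the frame is its own. A sketch, assuming an evenly split grid with no bezel compensation, that computes how many pixels each display should trim from each edge, in the same sense as the left/right/top/bottom properties of GStreamer’s videocrop element. `Crop` and `wall_crops` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Crop:
    # Pixels to trim from each edge of the full frame; same meaning as the
    # left/right/top/bottom properties of GStreamer's videocrop element.
    left: int
    right: int
    top: int
    bottom: int


def wall_crops(width: int, height: int, rows: int, cols: int) -> Dict[Tuple[int, int], Crop]:
    """Hypothetical helper: split a frame evenly into rows x cols tiles.

    Ignores bezels; integer edges are computed so the tiles cover every
    pixel exactly once even when the size does not divide evenly.
    """
    crops = {}
    for r in range(rows):
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            y0, y1 = r * height // rows, (r + 1) * height // rows
            crops[(r, c)] = Crop(left=x0, right=width - x1, top=y0, bottom=height - y1)
    return crops
```

Each client would then apply its tile’s values on a videocrop element ahead of its video sink, letting the sink scale the tile up to fill the display.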

What next

At this point, the outline of what I think the API should look like is done. I still need to create the transport abstraction, but that’s pretty much a matter of extracting out the properties and signals that are part of the existing TCP transport.

What I would like is to hear from you, my dear readers who are interested in using this library — does the API look like it would work for you? Does the transport mechanism I describe above cover what you might need? There is example code that should make it easier to understand how this library is meant to be used.

Depending on the feedback I get, my next steps will be to implement the transport interface, refine the API a bit, fix a bunch of FIXMEs, and then see if this is something we can include in gst-plugins-bad.

Feel free to comment on the GitHub repository, on this blog, or via email.

And don’t forget to watch this space for some videos and measurements of how GStreamer’s synchronised playback fares in real life!

Jens Georg

November 9, 2016 — 3:50 pm

Great! Rygel patches welcome! \o/ :D

Arun

November 10, 2016 — 9:09 pm

:D As I add more features, there should be more ideas about what we can add to Rygel to make use of this.

Jens Georg

November 15, 2016 — 3:02 pm

There’s a UPnP/DLNA standard extension (CLOCKSYNC or something like that). I have a kind of umbrella ticket open at https://bugzilla.gnome.org/show_bug.cgi?id=757681 where I started gathering random resources; I’m not sure how this all fits together in the end.

Arun

November 17, 2016 — 6:14 pm

Sweet, I’m following the bug. Let’s see what we can come up with.

Stu

November 10, 2016 — 1:14 am

I’m building a videowall right now that could have used this.

Some things to solve: handling disconnection and reconnection gracefully (and handling the GStreamer clock, which is not something I’ve looked into yet).

Some sort of playlist API: since it might take 150 ms or more to get across Wi-Fi, you need to know what to play next before the current item has finished playing.

For images, you need to know how long to display them, since they reach EOS immediately.

Transformations: for a video wall, each screen can display part of a larger virtual image.
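Both playlist points can be illustrated with a small scheduling sketch: items are laid out back to back on the shared clock, still images get an explicit display time because they reach EOS immediately, and each item must be announced some lead time before it starts, to absorb network delay. `Item`, `schedule`, the 5 s image default and the 500 ms lead used in the test are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical default: how long to show a still image, in ns.
DEFAULT_IMAGE_DURATION = 5_000_000_000


@dataclass
class Item:
    uri: str
    duration: Optional[int] = None  # ns; images may leave this unset
    is_image: bool = False


def schedule(items: List[Item], start: int, lead: int) -> List[Tuple[str, int, int]]:
    """Return (uri, start_time, announce_by) for each item, in shared-clock ns.

    announce_by is the latest time the server should send the item to
    clients so they have `lead` ns to receive it and get ready.
    """
    plan = []
    t = start
    for item in items:
        if item.duration is not None:
            duration = item.duration
        elif item.is_image:
            # Images reach EOS immediately, so the display time must be set.
            duration = DEFAULT_IMAGE_DURATION
        else:
            raise ValueError(f"unknown duration for {item.uri}")
        plan.append((item.uri, t, t - lead))
        t += duration
    return plan
```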

Arun

November 10, 2016 — 9:12 pm

So the first two things I’m looking at after some API cleanups (which are more or less done now) are two things you mention: playlists and transformations.

Images should be relatively straightforward to add on top of this (I’ll add it to the TODO).

Connection/disconnection is handled gracefully: a client can turn up at any time and be in sync. The server deals with clients going away fine too. I also want to be able to deal with dynamically updating client transformations based on this (which I intend to have from the get-go while implementing transformations).
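One way to picture dynamically updating transformations as clients come and go is a small piece of server-side bookkeeping that hands out video wall tiles: a joining client takes the first free tile, and a departing client frees its tile for the next one. `TileRegistry` and its methods are hypothetical. This is a sketch, not a description of what the library does.

```python
from typing import Dict, List, Optional, Tuple

Tile = Tuple[int, int]  # (row, column) in the video wall


class TileRegistry:
    """Hypothetical server-side bookkeeping of which client shows which tile."""

    def __init__(self, rows: int, cols: int) -> None:
        # Free tiles kept in row-major order so they are handed out predictably.
        self.free: List[Tile] = [(r, c) for r in range(rows) for c in range(cols)]
        self.assigned: Dict[str, Tile] = {}

    def join(self, client_id: str) -> Optional[Tile]:
        # Joining twice is harmless: the client keeps its current tile.
        if client_id in self.assigned:
            return self.assigned[client_id]
        if not self.free:
            return None  # wall is full; the client gets no tile for now
        tile = self.free.pop(0)
        self.assigned[client_id] = tile
        return tile

    def leave(self, client_id: str) -> None:
        tile = self.assigned.pop(client_id, None)
        if tile is not None:
            self.free.append(tile)
            self.free.sort()
```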

Not sure what you’re referring to with regards to the GStreamer clock.

Angel

November 11, 2016 — 2:26 am

Let’s say audio and video come from different servers, but both streams should be played in sync.

Have you considered syncing playback in a setup with multiple servers and multiple clients?

Arun

November 14, 2016 — 7:27 am

This is not a feature I’ve explicitly targeted, but it’s very doable — how it’s done is described in Sebastian’s talk, which I linked to (https://gstconf.ubicast.tv/videos/synchronised-multi-room-media-playback-and-distributed-live-media-processing-and-mixing-with-gstreamer/)

It shouldn’t be too hard to add the metadata into the control protocol to enable this.

Mikl

November 17, 2016 — 5:03 pm

How accurate and fast are you going to be?

In my case, I have a number of devices (more than 10) in a field. Some of them are wired, others are wireless. They have different roles: video sources, audio, information providers, event registration. All of them should be synchronized to within milliseconds.

Will you handle time sync, delays, timeouts, watchdogs, packets arriving out of order, …?

If so, we could start working together on this library. Or is that outside your scope?

Arun

November 17, 2016 — 6:17 pm

The framework I’m building should allow you to do all this. I’ve seen sub-5 ms (worst case) sync on pretty terrible hardware over Wi-Fi, so that degree of synchronisation should be easy enough to reach.

My plan for multi-room is to make it generic, so that you can have client groups, and each group can have its own stream. This should fit what you need for clients to be able to play different things.
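The client-group idea can be sketched as a little server-side state: each group has its own stream, and each client belongs to at most one group, so the server knows which stream (and, in a fuller implementation, which base time) to send to whom. `Group` and `GroupedServer` are hypothetical names, not part of the library.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Group:
    """Hypothetical client group: one stream shared by a set of clients."""

    uri: str
    clients: Set[str] = field(default_factory=set)


class GroupedServer:
    """Sketch of server-side state for multi-room playback (not the real API)."""

    def __init__(self) -> None:
        self.groups: Dict[str, Group] = {}

    def set_stream(self, group: str, uri: str) -> None:
        # Create the group on first use, otherwise switch what it plays.
        if group in self.groups:
            self.groups[group].uri = uri
        else:
            self.groups[group] = Group(uri)

    def add_client(self, group: str, client: str) -> None:
        # A client belongs to at most one group, so moving it removes it from
        # its old group. Raises KeyError for an unknown group.
        target = self.groups[group]
        for g in self.groups.values():
            g.clients.discard(client)
        target.clients.add(client)

    def uri_for(self, client: str) -> Optional[str]:
        for g in self.groups.values():
            if client in g.clients:
                return g.uri
        return None
```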

Delay, timeouts, watchdog, packet order etc. are dependent on the transport, which is pretty much a plug-what-you-want mechanism, with a default TCP transport which at least takes care of packet order.
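One detail worth noting for a TCP transport: TCP preserves byte order but not message boundaries, so a single read may return half a message or several at once. Below is a sketch of one common solution, newline-delimited JSON. This framing is an assumption for illustration and not necessarily what the default gst-sync-server transport uses. `encode` and `LineDecoder` are hypothetical names.

```python
import json
from typing import Any, Dict, List


def encode(message: Dict[str, Any]) -> bytes:
    # json.dumps escapes newlines inside strings and, without indent, emits no
    # raw newlines, so b"\n" can only ever mark the end of a message.
    return json.dumps(message).encode("utf-8") + b"\n"


class LineDecoder:
    """Reassembles newline-delimited JSON messages from arbitrary TCP reads."""

    def __init__(self) -> None:
        self._buffer = b""

    def feed(self, chunk: bytes) -> List[Dict[str, Any]]:
        self._buffer += chunk
        # The last piece is an incomplete message (possibly empty); keep it.
        *complete, self._buffer = self._buffer.split(b"\n")
        return [json.loads(line) for line in complete if line.strip()]
```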

Contributions are welcome, of course!