Unfortunately, the previous Bluetooth audio standards (BR/EDR and A2DP – used

by most Bluetooth audio devices you’ve come across) were not well-suited for

these use-cases, especially from a power-consumption perspective. This meant

that HA users would either have to rely on devices using proprietary protocols

(usually limited to Apple devices), or have a cumbersome additional dongle with

its own battery and charging needs.

Recent Past: Bluetooth LE

The more recent Bluetooth LE specification addresses some of the issues with

the previous spec (now known as Bluetooth Classic). It provides a low-power

base for devices to communicate with each other, and has been widely adopted in

consumer devices.

On top of this, we have the LE Audio standard, which provides audio streaming

services over Bluetooth LE for consumer audio devices and HAs. The hearing aid

industry has been an active participant in its development, and I expect we will

see widespread support over time.

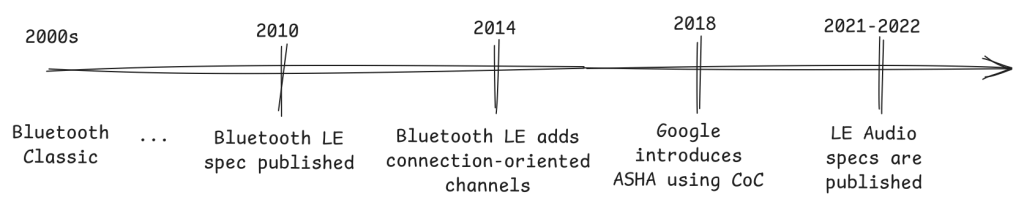

The base Bluetooth LE specification has been around since 2010, but the LE Audio

specification has only been public since 2021/2022. We’re still seeing devices

with LE Audio support trickle into the market.

In 2018, Google partnered with a hearing aid manufacturer to announce the ASHA

(Audio Streaming for Hearing Aids) protocol, presumably as a stop-gap. The

protocol uses Bluetooth LE (but not LE Audio) to support low-power audio

streaming to hearing aids, and is publicly available.

Several devices have shipped with ASHA support in the last ~6 years.

Hot Take: Obsolescence is bad UX

As end-users, we understand the push/pull of technological advancement and

obsolescence. As responsible citizens of the world, we also understand the

environmental impact of this.

The problem is much worse when we are talking about medical devices. Hearing

aids are expensive, and are expected to last a long time. It’s not uncommon for

people to use the same device for 5-10 years, or even longer.

In addition to the financial cost, there is also a significant emotional cost

to changing devices. There is usually a period of adjustment during which one

might be working with an audiologist to tune the device to one’s hearing.

Neuroplasticity allows the brain to adapt to the device and extract more

meaning over time. Changing devices effectively resets the process.

All this is to say that supporting older devices is a worthy goal in itself,

but has an additional set of dimensions in the context of accessibility.

HAs and Linux-based devices

Because of all this history, hearing aid manufacturers have traditionally

focused on mobile devices (i.e. Android and iOS). This is changing, with Apple

supporting its proprietary MFi (made for iPhone/iPad/iPod) protocol on macOS,

and Windows adding support for LE Audio on Windows 11.

This does leave the question of Linux-based devices, which is our primary

concern – can users of free software platforms also have an accessible user

experience?

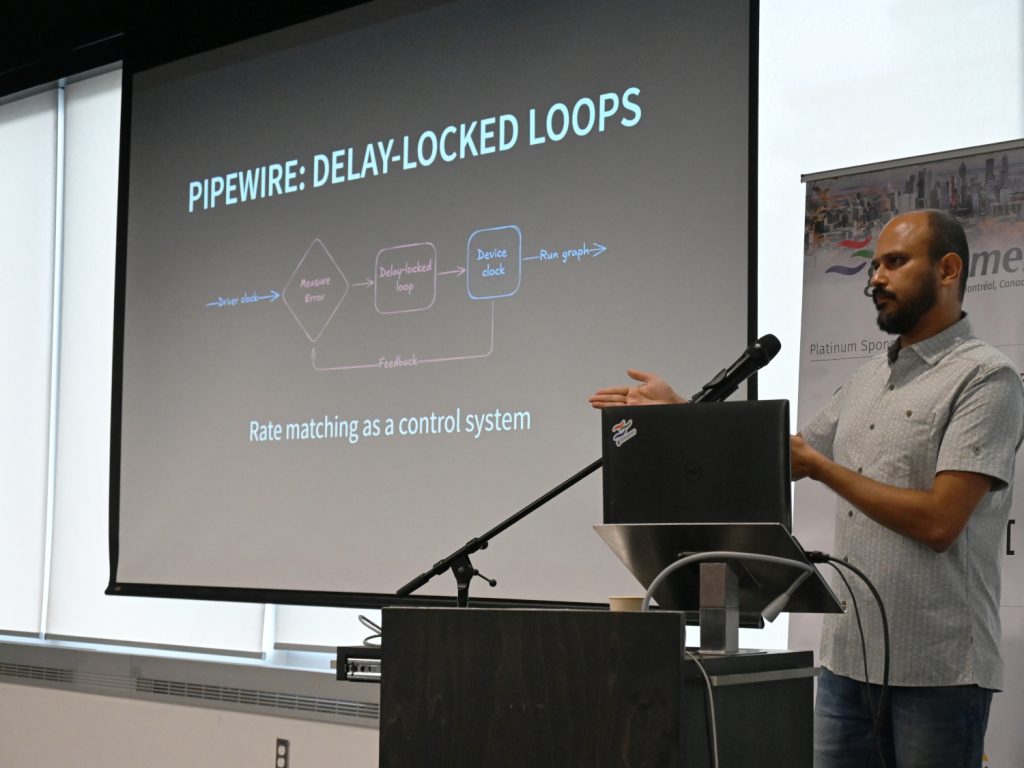

A lot of work has gone into adding Bluetooth LE support in the Linux kernel and

BlueZ, and more still to add LE Audio support. PipeWire’s Bluetooth module now

includes support for LE Audio, and there is continuing effort to flesh this

out. Linux users with LE Audio-based hearing aids will be able to take

advantage of all this.

However, the ASHA specification was only ever supported on Android devices.

This is a bit of a shame, as there are likely a significant number of hearing

aids out there with ASHA support, which will hopefully still be around for the

next 5+ years. This felt like a gap that we could help fill.

Step 1: A Proof-of-Concept

We started out by looking at the ASHA specification, and the state of Bluetooth

LE in the Linux kernel. We spotted some things that the Android stack exposes

that BlueZ does not, but it seemed like all the pieces should be there.

Friend-of-Asymptotic Ravi Chandra Padmala spent some time with us to implement a

proof-of-concept. This was a pretty intense journey in itself, as we had to

identify some good reference hardware (we found an ASHA implementation on the

onsemi RSL10), and clean out the pipes between the kernel and userspace (LE

connection-oriented channels, which ASHA relies on, weren’t commonly used at

that time).

We did eventually get the proof-of-concept done, and this gave us confidence to

move to the next step of integrating this into BlueZ – albeit after a hiatus of

paid work. We have to keep the lights on, after all!

Step 2: ASHA in BlueZ

The BlueZ audio plugin implements various audio profiles within the BlueZ

daemon – this includes A2DP for Bluetooth Classic, as well as BAP for LE

Audio.

We decided to add ASHA support within this plugin. This would allow BlueZ to

perform privileged operations and then hand off a file descriptor for the

connection-oriented channel, so that any userspace application (such as

PipeWire) could actually stream audio to the hearing aid.
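
To make the hand-off a little more concrete, here is a minimal Python sketch of what the streaming side might do once it holds that file descriptor. It assumes, based on my reading of the ASHA specification rather than anything in the BlueZ or PipeWire code, that each audio packet is a one-byte sequence counter followed by 160 bytes of G.722 audio (20 ms at 64 kbit/s). The names `frame_packets` and `stream_to_fd` are hypothetical, and a real sender would also need pacing and flow control.

```python
import os

# Assumption for illustration only: one packet is a 1-byte sequence counter
# followed by 160 bytes of G.722 audio (20 ms at 64 kbit/s).
G722_FRAME_BYTES = 160


def frame_packets(encoded: bytes, first_seq: int = 0):
    """Yield sequence-numbered packets from a buffer of encoded audio.

    Trailing bytes that do not fill a whole frame are ignored in this sketch.
    """
    seq = first_seq
    for off in range(0, len(encoded) - G722_FRAME_BYTES + 1, G722_FRAME_BYTES):
        # The counter wraps around after 255 because it is a single byte.
        yield bytes([seq & 0xFF]) + encoded[off:off + G722_FRAME_BYTES]
        seq += 1


def stream_to_fd(fd: int, encoded: bytes) -> int:
    """Write each packet to the given descriptor and return the packet count."""
    count = 0
    for packet in frame_packets(encoded):
        # On a packet-oriented channel, each write would correspond to one packet.
        os.write(fd, packet)
        count += 1
    return count
```

The point of the split is that the privileged connection setup stays in the daemon, while the application only needs to frame and write data.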

I implemented an initial version of the ASHA profile in the BlueZ audio plugin

last year, and thanks to Luiz Augusto von Dentz’s guidance and reviews, the

plugin has landed upstream.

This has been tested with a single hearing aid, and stereo support is pending.

In the process, we also found a small community of folks with deep interest in

this subject, and you can join us on #asha on the BlueZ Slack.

Step 3: PipeWire support

To get end-to-end audio streaming working with any application, we need to

expose the BlueZ ASHA profile as a playback device on the audio server (i.e.,

PipeWire). This would make the HAs appear as just another audio output, and we

could route any or all system audio to them.

My colleague, Sanchayan Maity, has been working on this for the last few weeks.

The code is all more or less in place now, and you can track our progress on the

PipeWire MR.

Step 4 and beyond: Testing, stereo support, …

Once we have the basic PipeWire support in place, we will implement stereo

support (the spec does not support more than 2 channels), and then we’ll have a

bunch of testing and feedback to work with. The goal is to make this a solid

and reliable solution for folks on Linux-based devices with hearing aids.
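
As a rough idea of what stereo involves on the sending side, the sketch below splits interleaved 16-bit stereo PCM into separate left and right buffers. It assumes that each hearing aid receives one channel over its own connection, which is an assumption for illustration and not a description of the eventual BlueZ or PipeWire implementation. The function name `split_stereo` is hypothetical.

```python
from array import array


def split_stereo(interleaved: bytes):
    """Split interleaved 16-bit stereo PCM (L R L R ...) into two mono buffers."""
    samples = array("h")  # signed 16-bit samples in native byte order
    samples.frombytes(interleaved)
    if len(samples) % 2:
        raise ValueError("expected whole stereo frames (an even number of samples)")
    left = samples[0::2]   # even-indexed samples are the left channel
    right = samples[1::2]  # odd-indexed samples are the right channel
    return left.tobytes(), right.tobytes()
```

Each mono buffer would then be encoded and sent separately, and keeping the two sides in sync is where most of the real work lies.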

Once that is done, there are a number of UI-related tasks that would be nice to

have in order to provide a good user experience. This includes things like

combining the left and right HAs to present them as a single device, and access

to any tuning parameters.
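
One way to think about combining the two sides is to group devices by an identifier that both members of a set share. The sketch below does that with a hypothetical `HearingAid` record. The `sync_id` and `side` fields are assumptions for illustration (as I understand it, the ASHA specification has devices advertise a shared set identifier and a left/right flag), and none of these names come from BlueZ or PipeWire.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class HearingAid:
    address: str  # Bluetooth address of the device (hypothetical field)
    sync_id: int  # identifier shared by both devices of a set (assumed)
    side: str     # "left" or "right"


def pair_devices(devices):
    """Group devices by sync_id, returning {sync_id: {"left": ..., "right": ...}}."""
    sets = defaultdict(dict)
    for dev in devices:
        sets[dev.sync_id][dev.side] = dev
    return dict(sets)
```

A UI could then show each entry as a single device, falling back to a one-sided entry when only one hearing aid is connected.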

Getting it done

This project has been on my mind since the ASHA specification was announced,

and it has been a long road to get here. We are in the enviable position of

being paid to work on challenging problems, and we often contribute our work

upstream. However, there are many such projects that would be valuable to

society, but don’t necessarily have a clear source of funding.

In this case, we found ourselves in an interesting position – we have the

expertise and context around the Linux audio stack to get this done. Our

business model allows us the luxury of taking bites out of problems like this,

and we’re happy to be able to do so.

However, it helps immensely when we do have funding to take on this work

end-to-end – we can focus on the task entirely and get it done faster.

Onward…

I am delighted to announce that we were able to find the financial support to

complete the PipeWire work! Once we land basic mono audio support in the MR

above, we’ll move on to implementing stereo support in the BlueZ plugin and the

PipeWire module. We’ll also be testing with some real-world devices, and we’ll

be leaning on our community for more feedback.

This is an exciting development, and I’ll be writing more about it in a

follow-up post in a few days. Stay tuned!