I wrote about our time at the GStreamer Conference in October, and one important thing I was able to do there was spend some time with all-around great guy George, reflecting on where the GStreamer plugins for PipeWire are and what we need to do to get them to a rock-solid state.

This is a summary of our conversation, in the form of a to-do list of sorts…

Status Quo

Currently, we have two elements: pipewiresrc and pipewiresink. Both elements work with audio and video, and instantiate a PipeWire capture and playback stream, respectively. The stream, as with any PipeWire client, appears as a node in the PipeWire graph.

Buffers are managed in the GStreamer pipeline using bufferpools, and recently Wim re-enabled exposing the stream clock as a GStreamer clock.

There have been a number of issues that have cropped up over time, and we’ve been plugging away at addressing them, but it was worth stepping back and looking at the whole for a bit.

Use Cases

The straightforward uses of these elements might be to represent client streams: pipewiresrc might connect to an audio capture device (like a microphone), or video capture device (like a webcam), and provide the data for downstream elements to consume. Similarly pipewiresink might be used to play audio to the system output (speakers or headphones, perhaps).

Because of the flexibility of the PipeWire API, these elements may also be used to provide a virtual capture or playback device. So pipewiresrc might provide a virtual audio sink, which applications could connect to in order to stream audio over the network (say, as a WebRTC stream).

Conversely, it is possible to use pipewiresink to provide a virtual capture device – for example, the pipeline might generate a video stream and expose it as a virtual camera for other applications to use.

We might even combine the two cases: one might connect to a webcam as a client, apply some custom video processing, and then expose that stream back as a virtual camera source as easily as:

    pipewiresrc target-object="MyCamera" ! <some video filters> ! \
        pipewiresink provide=true stream-properties="props,media.class=Video/Source,media.role=Camera"

So we have a minor combinatorial explosion across 3 axes, and all combinations are valid:

- pipewiresrc vs. pipewiresink

- audio vs. video

- stream vs. virtual device

For each of these combinations, we might have different behaviour across the various issues below.
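To make the size of that matrix concrete, here is a small sketch that simply enumerates the three axes listed above. It is plain Python with no GStreamer or PipeWire involved, and the labels are just the names used in this post.

```python
from itertools import product

# The three axes described above; every combination is a valid use case.
elements = ("pipewiresrc", "pipewiresink")
media = ("audio", "video")
roles = ("stream", "virtual device")

combinations = list(product(elements, media, roles))

for element, medium, role in combinations:
    print(f"{element:13} {medium:6} {role}")

print(len(combinations), "combinations")  # 2 * 2 * 2 = 8
```

Each of those eight rows is a case we would want to reason about (and ideally test) for every issue discussed below.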

Split ’em up?

Before we look at specific issues, it is worth pointing out that the PipeWire elements are unusual in that they support both audio and video with the same code. This seems like a tantalisingly elegant idea, and it’s quite neat that we are able to get this far with this unified approach.

However, as we examine the specific issues we are seeing, it does seem to emerge that the audio and video paths diverge in several ways. It may be time to consider whether the divergence merits just splitting them up into separate audio and video elements.

Linking

The first issue that comes to mind is how we might want PipeWire or WirePlumber to manage linking the nodes from the GStreamer pipeline with other nodes (devices or streams).

For the playback/capture stream use-cases, we would want the nodes to automatically be connected to a sink/source node when the GStreamer pipeline goes to the PAUSED or PLAYING state, and for that link to be torn down when leaving those states. It might be possible for the link to “move” if, for example, the default playback or capture device changes, though a “move” is really the removal of the current link with a new link following.

For the virtual device use-cases, the pipeline state should likely follow the link state. That is, when a node is connected to our virtual device, we want the GStreamer pipeline to start producing/consuming data, and when disconnected, it should go back to “sleep”, possibly running again later.

The latter is something that a GStreamer application using these plugins might have to manage manually, but simplifying this and supporting this via gst-launch-1.0 for easy command-line use would be nice to have.

There are already the beginnings of support for such usage via the provide property on pipewiresink, but more work is needed to make this truly usable.
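The "pipeline state follows link state" idea for virtual devices can be sketched as a toy model. This is not GStreamer or PipeWire API: the class, the `on_link_added` / `on_link_removed` callbacks and the peer names are all hypothetical, and using PAUSED as the "sleep" state is an assumption made for illustration.

```python
from enum import Enum


class State(Enum):
    PAUSED = "PAUSED"
    PLAYING = "PLAYING"


class VirtualDevicePipeline:
    """Toy stand-in for a GStreamer pipeline backing a virtual device."""

    def __init__(self):
        self.links = set()
        self.state = State.PAUSED  # "asleep" until something connects

    def _update_state(self):
        # Run only while at least one peer is linked to us.
        self.state = State.PLAYING if self.links else State.PAUSED

    def on_link_added(self, peer):
        # Hypothetical callback: a node was linked to our virtual device.
        self.links.add(peer)
        self._update_state()

    def on_link_removed(self, peer):
        # Hypothetical callback: a link to our virtual device was removed.
        self.links.discard(peer)
        self._update_state()


pipeline = VirtualDevicePipeline()
pipeline.on_link_added("video-call-app")
pipeline.on_link_added("recorder")
pipeline.on_link_removed("video-call-app")
still_running = pipeline.state  # one peer is still linked

pipeline.on_link_removed("recorder")  # last link gone, back to sleep
print(still_running.value, "->", pipeline.state.value)
```

In a real application this bookkeeping is what would have to be done by hand today, which is why having the elements (or gst-launch-1.0) take care of it would be convenient.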

Bufferpools

Closely related to linking are buffers and bufferpools, as the process of linking nodes is what makes buffers for data exchange available to PipeWire nodes.

While bufferpools are a valuable concept for memory efficiency and avoiding unnecessary memcpy()s, they come with some complexity overhead in managing the pipeline. For one, as the number of buffers in a bufferpool is limited, it is possible to exhaust the set of buffers (with a large queue for example).
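As a rough illustration of the exhaustion problem, the following toy model pairs a fixed-size pool with a larger downstream queue. `FixedPool` is a hypothetical stand-in, not the GstBufferPool API, and the sizes are made up; a real pool would typically block in acquire rather than return `None`, which is exactly how a pipeline ends up stalled.

```python
from collections import deque


class FixedPool:
    """Toy fixed-size buffer pool (not the GstBufferPool API)."""

    def __init__(self, size):
        self.free = deque(range(size))

    def acquire(self):
        # Return a buffer id, or None when every buffer is in flight.
        return self.free.popleft() if self.free else None

    def release(self, buf):
        self.free.append(buf)


pool = FixedPool(size=4)
queue = deque()       # downstream queue holding buffers that are in flight
QUEUE_CAPACITY = 8    # the queue can hold more buffers than the pool owns

produced = 0
while len(queue) < QUEUE_CAPACITY:
    buf = pool.acquire()
    if buf is None:
        break  # pool exhausted before the queue filled up
    queue.append(buf)
    produced += 1

exhausted = pool.acquire() is None

# Only once downstream consumes a buffer and returns it can we continue.
pool.release(queue.popleft())
recovered = pool.acquire() is not None

print(f"produced={produced} exhausted={exhausted} recovered={recovered}")
```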

There are also some lifecycle complexities that arise from links coming and going, as the corresponding buffers also then go away from under us, something that GStreamer bufferpools are not designed for.

A solution to the first problem might be to avoid using bufferpools for some cases (for example, they might not be very valuable for audio). The solution to the lifecycle problem is a trickier one, and no clear answer is apparent yet, at least with the APIs as they stand.

We might also need to support resizing bufferpools for some cases, and that is not something that is easy to support with how buffer management currently happens in PipeWire (the stream API does not really give us much of a handle on this).

Formats

In order to support the various use-cases, we want to be able to support either a fixed format (if we know what we are providing) or a negotiated format (if we can adapt in the GStreamer pipeline based on what PipeWire has/wants).

There is also a large surface area of formats that PipeWire supports that we need to make sure we support well:

- There are known issues with some planar video formats not being presented correctly by pipewiresrc

- We do not expose planar audio formats, although both GStreamer and PipeWire support them

- Support for DSD and passthrough audio (e.g. Dolby/DTS over HDMI) needs to be wired up

- Support for compressed formats (we added PipeWire support for decode + render on a DSP)

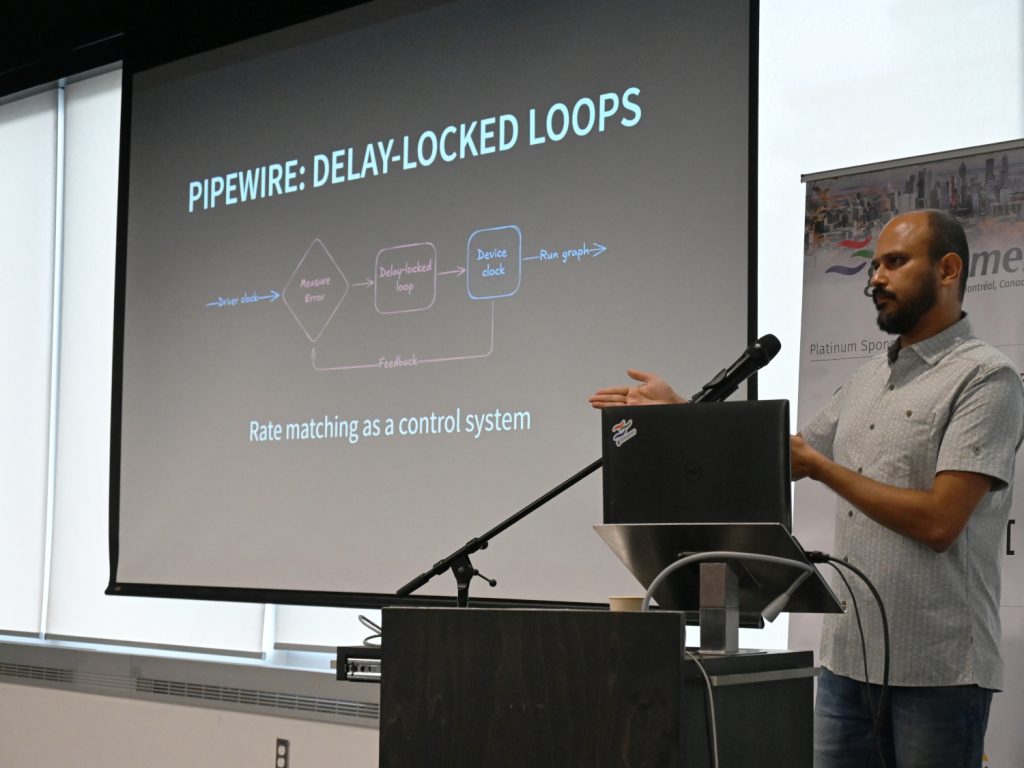

Rate matching

While Wim recently added some rate matching code to pipewiresink, there is work to be done to make sure that if there is skew between the GStreamer pipeline’s data rate and the audio device rate, we can use PipeWire’s rate adaptation features to compensate for such skew. This should work in both pipewiresink and pipewiresrc.

For some background on this topic, check out my talk on clock rate matching from a couple of months ago.
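To give a feel for what compensating for skew means, here is a deliberately simplified sketch. It is not the algorithm PipeWire or pipewiresink uses; the `update_rate` helper, the smoothing factor and the frame counts are all assumptions chosen for illustration. The idea is to compare how many frames the pipeline produced with how many the device consumed over a window, and nudge a resampling ratio towards that observation.

```python
def update_rate(current, produced, consumed, smoothing=0.1):
    """Nudge the resampling ratio towards the observed consumed/produced ratio.

    The ratio is "output frames to generate per input frame": above 1.0 means
    the device is consuming faster than the pipeline is producing.
    """
    observed = consumed / produced
    return current + smoothing * (observed - current)


rate = 1.0
# Hypothetical measurements: in each window the pipeline produces 48000 frames
# while the device consumes 48024, i.e. the device clock runs 0.05% fast.
for _ in range(100):
    rate = update_rate(rate, produced=48000, consumed=48024)

print(f"converged ratio: {rate:.6f}")
```

The smoothing keeps a single noisy measurement from causing an audible jump in the ratio, at the cost of reacting more slowly to real changes.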

Device provider conflicts

While we are improving the out-of-the-box experience of these elements, unfortunately the PipeWire device provider currently supersedes all others (the GStreamer Device Provider API allows for discovering devices on the system and the elements used to access them).

The higher rank might make sense for video (as system integrators likely want to start preferring PipeWire elements over V4L2), but it can lead to a bad experience for audio (the PulseAudio elements work better today via PipeWire’s PulseAudio emulation layer).

We might temporarily drop the rank of PipeWire elements for audio to avoid autoplugging them while we fix the problems we have.
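The effect of dropping the rank can be shown with a toy selection function. The numeric constants match GStreamer's GstRank values, but everything else is an assumption: the `pick` helper is hypothetical, the ranks assigned to pipewiresink and pulsesink are illustrative rather than their actual ranks, and treating anything below MARGINAL as "not autoplugged" is a simplification of this model.

```python
# Numeric values of GStreamer's GstRank enumeration.
RANK_NONE, RANK_MARGINAL, RANK_SECONDARY, RANK_PRIMARY = 0, 64, 128, 256


def pick(candidates):
    """Return the highest-ranked name, skipping anything below MARGINAL."""
    usable = {name: rank for name, rank in candidates.items()
              if rank >= RANK_MARGINAL}
    return max(usable, key=usable.get) if usable else None


# Illustrative ranks only, not the values the real elements register with.
audio_sinks = {"pipewiresink": RANK_PRIMARY + 1, "pulsesink": RANK_PRIMARY}
before = pick(audio_sinks)

# Temporarily drop the PipeWire element's rank for audio.
audio_sinks["pipewiresink"] = RANK_NONE
after = pick(audio_sinks)

print(before, "->", after)
```

The element is still available to anyone who asks for it by name; it just stops winning automatic selection.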

Probing formats

We create a “probe” stream in pipewiresink while getting ready to play audio, in order to discover what formats the device we would play to supports. This is required to detect supported formats and make decisions about whether to decode in GStreamer, what sample rate and format are preferred, etc.

Unfortunately, that also causes a “false” playback device startup sequence, which might manifest as a click or glitch on some hardware. Having a way to set up a probe that does not actually open the device would be a nice improvement.

Player state

There are a couple of areas where policy actions do not always surface well to the application/UI layer. One instance of this is where a stream is “corked” (maybe because only one media player should be active at a time) – we want to let the player know it has been paused, so it can update its state and let the UI know too. There is limited infrastructure for this already, via GST_MESSAGE_REQUEST_STATE.

Also, more of a session management (i.e. WirePlumber / system integration) thing, we do not really have the concept of a system-wide media player state. This would be useful if we want to exercise policy like “don’t let any media player play while we’re on a call”, and have that state be consistent across UI interactions (i.e. hitting play during a call does not trigger playback, maybe even lets the user know why it’s not working / provides an override).