This one’s going to be a bit of a long post. You might want to grab a cup of coffee before you jump in!

Over the last few years, I’ve spent some time getting PulseAudio up and running on a few Android-based phones. There was the initial Galaxy Nexus port, a proof-of-concept port of Firefox OS (git) to use PulseAudio instead of AudioFlinger on a Nexus 4, and most recently, a port of Firefox OS to use PulseAudio on the first gen Moto G and last year’s Sony Xperia Z3 Compact (git).

The process so far has been largely manual and painstaking, and I’ve been trying to make that easier. But before I talk about the how of that, let’s see how all this works in the first place.

The Problem

If you have managed to get by without having to dig into this dark pit, consider yourself lucky: the porting process can be something of an exercise in masochism. More so if you’re in my shoes and don’t have access to any of the documentation for the audio hardware. Hardware vendors and OEMs usually share these specifications only under NDA, which is hard to set up for someone just hacking on this stuff as an experiment or for fun in their spare time.

Broadly, the task involves looking at how the device is set up on Android, and then replicating that process using the standard ALSA library, which is what PulseAudio uses (this works because both the Android and generic Linux userspace talk to the same ALSA-based kernel audio drivers).

Android’s configuration

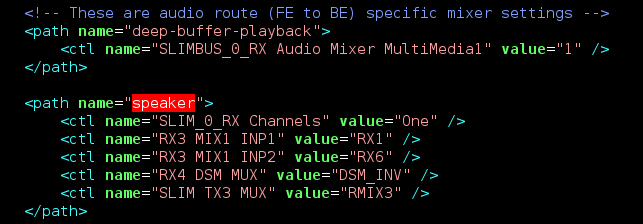

First, you look at the Android audio HAL code for the device you’re porting, and the corresponding mixer paths XML configuration. Between the two of these, you get a description of how you can configure the hardware to play back audio in various use cases (music, tones, voice calls), and how to route the audio (headphones, headset, speakers, Bluetooth).

In this example, there is one path that describes how to set up the hardware for “deep buffer playback” (used for music, where you can buffer a bunch of data and let the CPU go to sleep). The next path, “speaker”, tells us how to set up the routing to play audio out of the speaker.

These strings are not well-defined, so different hardware uses different path names and combinations to set up the hardware. The XML configuration also does not tell us a number of things, such as what format the hardware supports or what ALSA device to use. All of this information is embedded in the audio HAL code.
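To make the structure concrete, here is a minimal Python sketch that reads a mixer paths document and lists what each path sets. The control names and values in the sample are invented for illustration and do not describe any real device. The sketch also ignores details that real files can have, such as paths that reference other paths.

```python
import xml.etree.ElementTree as ET

# Illustrative mixer paths document. The control names and values are
# made up for this sketch and do not describe any real device.
MIXER_PATHS_XML = """
<mixer>
  <ctl name="Example RX Mixer" value="0" />
  <path name="deep-buffer-playback">
    <ctl name="Example Playback Route" value="1" />
  </path>
  <path name="speaker">
    <ctl name="Example RX Mixer" value="1" />
    <ctl name="Example Speaker Amp Switch" value="1" />
  </path>
</mixer>
"""


def parse_mixer_paths(xml_text):
    """Return (defaults, paths): default control values and per-path settings."""
    root = ET.fromstring(xml_text)
    # Top-level <ctl> elements give the initial (reset) value of each control.
    defaults = {c.get("name"): c.get("value") for c in root.findall("ctl")}
    paths = {}
    for path in root.findall("path"):
        # Keep controls in document order, since ordering can matter to hardware.
        paths[path.get("name")] = [
            (c.get("name"), c.get("value")) for c in path.findall("ctl")
        ]
    return defaults, paths


defaults, paths = parse_mixer_paths(MIXER_PATHS_XML)
for name, controls in paths.items():
    print(name, controls)
```

Note that nothing in this data says which ALSA device or sample format to use. That gap is exactly the information that has to come from reading the HAL code.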

Configuring with ALSA

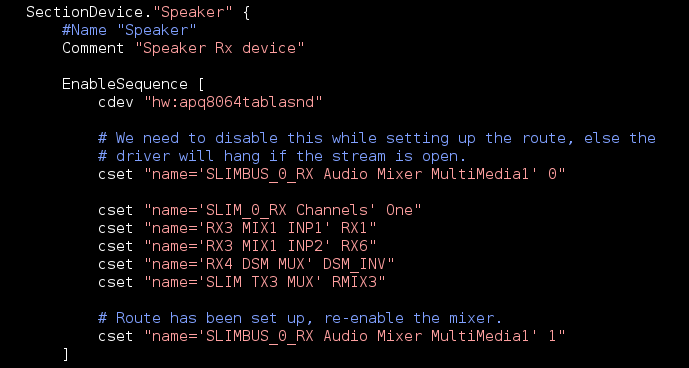

Next, you need to translate this configuration into something PulseAudio will understand[1]. The preferred method for this is ALSA’s UCM, which describes how to set up the hardware for each use case it supports, and how to configure the routing in each of those use cases.

This is a snippet from the “hi-fi” use case, which is the UCM use case roughly corresponding to “deep buffer playback” in the previous section. Within that, we’re looking at the “speaker device” and you can see the same mixer controls as in the previous XML file are toggled. This file does have some additional information — for example, this snippet specifies what ALSA device should be used to toggle mixer controls (“hw:apq8064tablasnd”).
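As a rough illustration of what such a device section looks like as text, the Python sketch below renders one from a list of mixer control settings. The function name and the control names are hypothetical, and the output follows the general shape of UCM device sections (an enable sequence and a disable sequence of `cset` commands after a `cdev` line) rather than reproducing the actual file from the port.

```python
def render_ucm_device(device, card, controls, defaults):
    """Render one UCM SectionDevice block as text.

    controls: ordered (name, value) pairs applied when the device is enabled.
    defaults: control name -> value to restore when the device is disabled.
    """
    def cset(name, value):
        return "\t\tcset \"name='{}' {}\"".format(name, value)

    lines = ['SectionDevice."{}" {{'.format(device),
             '\tComment "{}"'.format(device), ""]
    lines += ["\tEnableSequence [", '\t\tcdev "{}"'.format(card)]
    lines += [cset(name, value) for name, value in controls]
    lines += ["\t]", "", "\tDisableSequence [", '\t\tcdev "{}"'.format(card)]
    # Undo in reverse order, restoring each control's default value
    # (falling back to "0" when no default is known: an assumption).
    lines += [cset(name, defaults.get(name, "0"))
              for name, _ in reversed(controls)]
    lines += ["\t]", "}"]
    return "\n".join(lines)


# Hypothetical control names; only the card name comes from the post.
print(render_ucm_device(
    "Speaker",
    "hw:apq8064tablasnd",
    [("Example RX Mixer", "1"), ("Example Speaker Amp Switch", "1")],
    {"Example RX Mixer": "0", "Example Speaker Amp Switch": "0"},
))
```

Restoring defaults in reverse order on disable is a design choice of this sketch, not something the hardware necessarily requires.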

Doing the Porting

Typically, I start with the “hi-fi” use case — what you would normally use for music playback (and could likely use for tones and such as well). Getting the “phone” use case working is usually much more painful. In addition to setting up the audio hardware similar to the “hi-fi” use case, it involves talking to the modem, for which there isn’t a standard method across Android devices. To complicate things, the modem firmware can be extremely sensitive to the order/timing of setup, often with no means of debugging (a.k.a. fun times!).

When there is a new Android version, I need to look at all the changes in the HAL and the XML file, redo the translation to UCM, and then test everything again.
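Part of that re-checking can be mechanised. The sketch below is a hypothetical helper, assuming each version of the XML has already been parsed into a mapping from path name to a list of (control, value) pairs. It reports which paths were added, removed, or changed between two versions. The sample data is invented.

```python
def diff_paths(old, new):
    """Compare two {path name: [(control, value), ...]} mappings.

    Returns three sorted lists of path names: added, removed, changed.
    """
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    changed = sorted(n for n in set(old) & set(new) if old[n] != new[n])
    return added, removed, changed


# Invented data standing in for two Android releases of the same device.
old_paths = {
    "speaker": [("Example Speaker Amp Switch", "1")],
    "headphones": [("Example HPH Switch", "1")],
}
new_paths = {
    "speaker": [("Example Speaker Amp Switch", "1"),
                ("Example Speaker Boost", "1")],
    "bt-sco": [("Example BT Route", "1")],
}

added, removed, changed = diff_paths(old_paths, new_paths)
print("added:", added)
print("removed:", removed)
print("changed:", changed)
```

This only covers the XML side. Changes in the HAL code itself still need to be read by hand.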

This is clearly repetitive work, and I know I’m not the only one having to do it. Hardware vendors often face the same challenge when supporting the same devices on multiple platforms: Android’s HAL usually uses the XML config I showed above, ChromeOS’s CRAS and PulseAudio use ALSA UCM, and Intel uses the parameter framework with its own XML format.

Introducing xml2ucm

With this background, when I started looking at the Z3 Compact port last year, I decided to write a tool to make this and future ports easier. That tool is creatively named xml2ucm[2].

As we saw, the ALSA UCM configuration contains more information than the XML file. It contains a description of the playback and mixer devices to use, as well as some information about configuration (channel count, primarily). This information is usually hardcoded in the audio HAL on Android.

To deal with this, I introduced a small configuration file that provides the additional information required to perform the translation. The idea is that you write this configuration once, and can more or less perform the translation automatically. If the HAL or the XML file changes, it should be easy to implement that as a change in the configuration and just regenerate the UCM files.

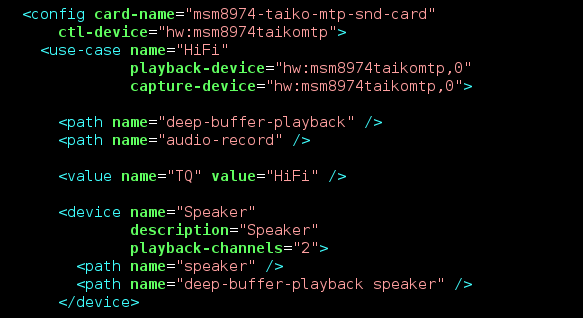

This example shows how Android XML like the snippet above can be converted to the corresponding UCM configuration. Once I had the code done, porting all the hi-fi bits on the Xperia Z3 Compact took about 30 minutes. The results of this are available as a more complete example: the mixer paths XML, the config XML, and the generated UCM.
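The overall idea can be sketched in a few lines of Python. This is not xml2ucm’s actual code (which is in Haskell) or its real configuration format (which is XML). The class names, field names, PCM name, and control names below are all hypothetical. The point is only that a small hand-written description supplies what the mixer paths lack, and the rest is a mechanical lookup.

```python
from dataclasses import dataclass, field


@dataclass
class DeviceConfig:
    name: str          # UCM device name, e.g. "Speaker"
    android_path: str  # matching path name in the Android mixer paths XML


@dataclass
class UseCaseConfig:
    name: str          # UCM use case name, e.g. "HiFi"
    android_path: str  # matching path name in the Android mixer paths XML
    playback_pcm: str  # ALSA PCM to use; on Android this lives in the HAL
    channels: int      # channel count; also hardcoded in the HAL
    devices: list = field(default_factory=list)


def translate(use_case, paths):
    """Combine the hand-written config with parsed mixer paths."""
    wanted = [use_case.android_path] + [d.android_path for d in use_case.devices]
    missing = [p for p in wanted if p not in paths]
    if missing:
        # Fail loudly if the XML changed and a path disappeared.
        raise KeyError("paths not found in mixer XML: " + ", ".join(missing))
    return {
        "use_case": use_case.name,
        "playback_pcm": use_case.playback_pcm,
        "channels": use_case.channels,
        "enable": paths[use_case.android_path],
        "devices": {d.name: paths[d.android_path] for d in use_case.devices},
    }


# Invented mixer paths, as {path name: [(control, value), ...]}.
paths = {
    "deep-buffer-playback": [("Example Playback Route", "1")],
    "speaker": [("Example Speaker Amp Switch", "1")],
}
hifi = UseCaseConfig(
    name="HiFi",
    android_path="deep-buffer-playback",
    playback_pcm="hw:examplecard,0",  # placeholder, not a real device
    channels=2,
    devices=[DeviceConfig("Speaker", "speaker")],
)
model = translate(hifi, paths)
print(model)
```

When the XML changes, only the parsed `paths` change. The config stays the same unless a path was renamed or removed, in which case the lookup fails with a clear error.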

What’s next

One big missing piece here is voice calls. I spent some time trying to get voice calls working on the two phones I had available to me (the Moto G and the Z3 Compact), but this is quite challenging without access to hardware documentation and I ran out of spare time to devote to the problem. It would be nice to have a complete working example for a device, though.

There are other configuration mechanisms out there — notably Intel’s parameter framework. It would be interesting to add support for that as well. Ideally, the code could be extended to build a complete model of the audio routing/configuration, and generate any of the configuration that is supported.

I’d like this tool to be generally useful, so feel free to post comments and suggestions on GitHub or just get in touch.

p.s. Thanks go out to Abhinav for all the Haskell help!

[1] Another approach, which the Ubuntu Phone and Jolla SailfishOS folks take, is to just use the Android HAL directly from PulseAudio to set up and use the hardware. This makes sense to quickly enable any arbitrary device (because the HAL provides a hardware-independent interface to do so). In the longer term, I prefer to enable using UCM and alsa-lib directly since it gives us more control, and allows us to use such features as PulseAudio’s dynamic latency adjustment if the hardware allows it.

[2] You might have noticed that the tool is written in Haskell. While this is decidedly not a popular choice of language, it did make for a relatively easy implementation and provides a number of advantages. The unfortunate cost is that most people will find it hard to jump in and start contributing. If you have a feature request or bug fix but are having trouble translating it into code, please do file a bug, and I would be happy to help!

Yash shah

July 2, 2016 — 9:09 am

I am working as an embedded software engineer for more than 2 yrs. I have experience of writing application program for car infotaintment system that uses ALSA APIs. I basically have no deep knowledge about audio domain & development. I am very keen to work on audio domain but i am clueless about how to start. I have no one to guide me. I would request you to please suggest me how can I start to have the depth of knowledge you are having on audio domain so that even I could work on similar things & help contributing to open source world. Thanks

John

July 2, 2016 — 9:13 am

Ale tuséu pønarç du mejo hula?

viswanath

August 10, 2016 — 6:59 pm

Nice info, here i like to know about audio volume:media_volume or stream_music. My doubt is if we want to overwrite the Audio volume value and where is will change other than the default values either in device/// etc or in vendor. Please help me in my doubt.